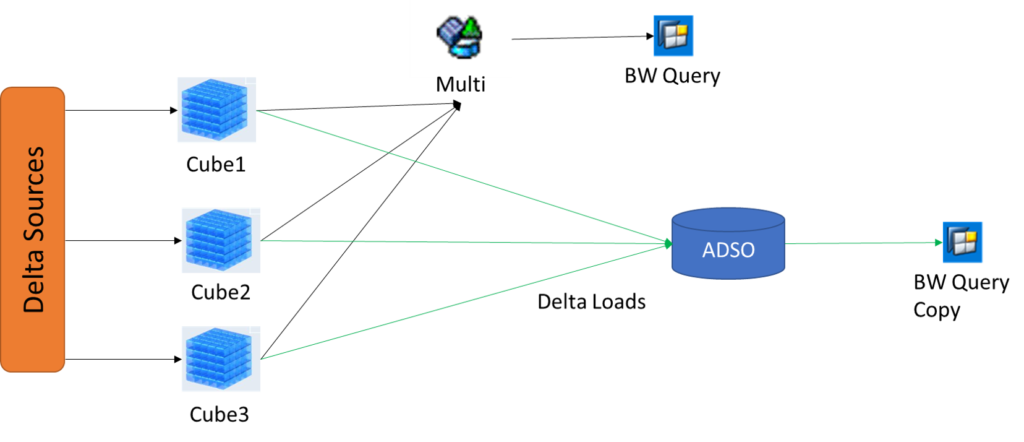

The Challenge: We want to extract data from the BW with a BW Query as a source. The data extraction has to be through an OData service with ODP technology. The extraction should have delta functionalities, to not overload the system with huge full loads. The BW Query is on top of a Multi Provider with only cubes selected. The cubes have no timestamp within the data.

The Problematic: although you can extract from a BW query, there is no chance to enable the delta extraction, as there is no field to refer to. Data can also not be copies to somewhere else with a timestamp, as this will take too much space in the HANA DB.

The Solution: establish a fake delta using the delta data load functionalities of BW.

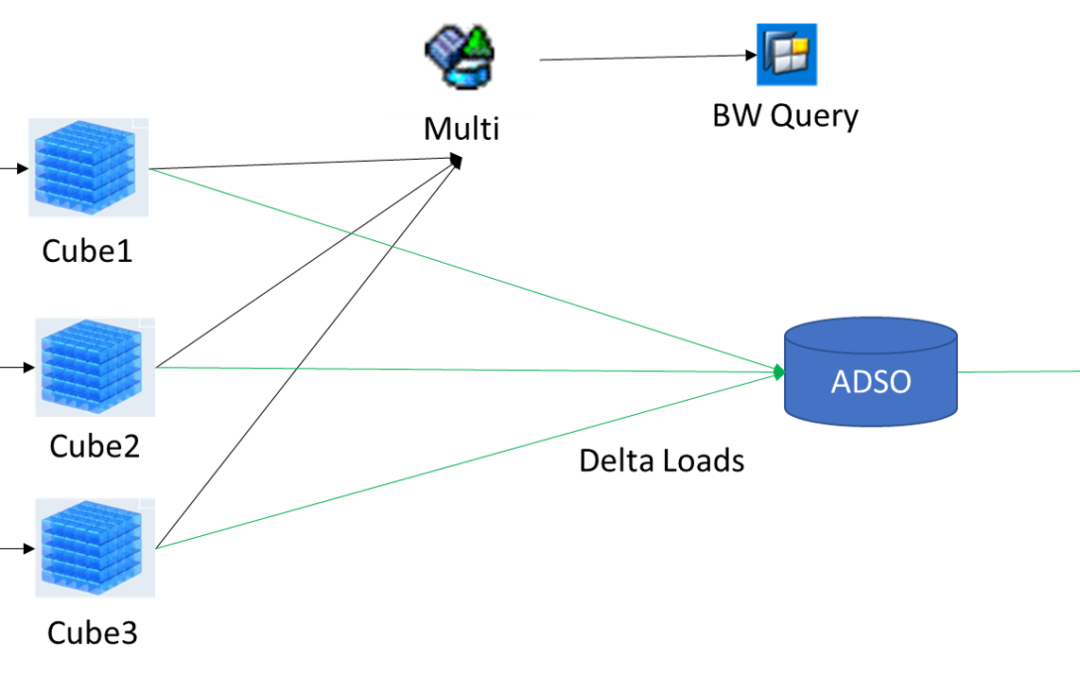

- Create an ADSO with the Cube structure and establish 1:1 load from all Multi Providers.

- Implement a timestamp in the data loading transformation. The timestamp can be part of the key.

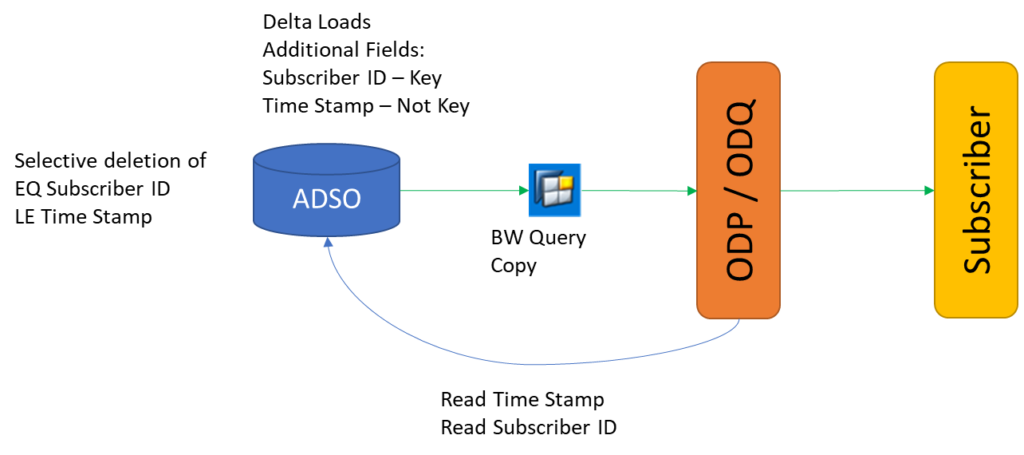

- Copy the query on top of the ADSO. Alternately, create a composite provider on top of the ADSO to make the architecture more flexible for the future.

- Create an OData Service with ODP with full load capabilities on top of the query.

Follow the process to extract the data

- Load data to ADSO

- Load data though OData Service to outside system

- Remove data from ADSO with selective deletion. Important here is not to affect the delta. (another Link)

- Repeat for the subsequent daily loads

The problem with this architecture is that you cannot reset the loads, as the data is deleted. You will have to full load again into the target system.

PUSH to Web with REST

As the data is available and deleted all the time, it is not a good solution to create the extractor outside the SAP system. We need to push the data before deleting it. For this, a REST Endpoint can be created outside the SAP system, to load data into it. The extraction triggering will happen within SAP, which will send the data outside. Tod o this follow this steps

- Create an HTTP client object: You can create an HTTP client object in ABAP using the class

cl_http_client. This class provides methods for sending HTTP requests and receiving HTTP responses. - Create an HTTP request: Once you have created the HTTP client object, you can create an HTTP request using the

create_requestmethod. In the request, you can specify the HTTP method (e.g. POST), the URL of the REST endpoint, and any headers or parameters required by the endpoint. - Set the request body: If your REST endpoint requires a request body, you can set it using the

set_request_entitymethod of the HTTP request object. The request body should be in the format required by the endpoint (e.g. JSON, XML). - Send the request: Once you have set up the HTTP request object, you can send the request using the

sendmethod of the HTTP client object. This method will send the request to the REST endpoint and return an HTTP response object. - Process the response: Once you have received the HTTP response object, you can process the response data as required. The response data may be in the form of JSON, XML, or other formats depending on the REST endpoint.

DATA: lo_client TYPE REF TO cl_http_client,

lo_request TYPE REF TO if_http_request,

lo_response TYPE REF TO if_http_response,

lv_url TYPE string,

lv_query TYPE string,

lt_data TYPE STANDARD TABLE OF <your_table_type>, " Replace with the name of your table type

ls_data TYPE <your_table_type>, " Replace with the name of your table type

lv_json TYPE string,

lv_batch_size TYPE i VALUE 100. " Specify the batch size here

" Define the URL of the REST endpoint

lv_url = 'https://example.com/api/data'.

" Define the name of the BW Query

lv_query = '<your_query_name>'. " Replace with the name of your BW Query

" Create an HTTP client object

CREATE OBJECT lo_client.

" Create an HTTP request object

CREATE OBJECT lo_request

EXPORTING

iv_method = if_http_request=>co_request_method_post

iv_uri = lv_url.

" Retrieve data from the BW Query

cl_rsda_query=>get_data(

EXPORTING

i_query_name = lv_query

i_dtpid = space

i_stepno = 0

i_package_size = 0

i_ignore_signals = abap_false

i_ignore_exceptions = abap_false

IMPORTING

e_data_provider = DATA(lo_data_provider)

e_t_data_range = DATA(lt_data_range)

CHANGING

ct_data = lt_data ).

" Loop through the data in batches

DO ( lines( lt_data ) / lv_batch_size ) TIMES.

" Get the data batch

DATA(lv_from) = ( sy-index - 1 ) * lv_batch_size + 1.

DATA(lv_to) = sy-index * lv_batch_size.

IF lv_to > lines( lt_data ).

lv_to = lines( lt_data ).

ENDIF.

DATA(lt_batch) = lt_data[ lv_from .. lv_to ].

" Loop through the batch and add the data to the request body

CLEAR lv_json.

LOOP AT lt_batch INTO ls_data.

" Convert the data to JSON format

DATA(lv_row_json) = /ui2/cl_json=>serialize( data = ls_data ).

" Add the row to the JSON array

CONCATENATE lv_json ',' lv_row_json INTO lv_json.

ENDLOOP.

" Remove the leading comma

IF lv_json(1) = ','.

DELETE lv_json(1).

ENDIF.

" Set the request body

lo_request->set_request_entity( lv_json ).

" Send the request to the REST endpoint

CALL METHOD lo_client->send

EXPORTING

request = lo_request

RECEIVING

response = lo_response.

" Check the HTTP status code of the response

IF lo_response->get_status_code( ) = 200.

" Success! Do something here if needed.

ELSE.

" Handle errors here.

ENDIF.

ENDDO.

The data here is selected from a BW Query. For each row of data, we convert it to JSON format using the /ui2/cl_json=>serialize method and set it as the request body using the set_request_entity method. We then send the request to the REST endpoint using the send method and check the HTTP status code of the response.

You’ll need to replace <your_table_type> with the name of your table type and <your_adsotype> with the name of your ADSO type, and <request_id> with the ID of the request.

The variable lv_batch_size specify the batch size (in this case, 100 rows per batch). We then loop through the data in batches using the DO ... TIMES statement and select the data for the current batch using the lt_data[ lv_from .. lv_to ] syntax.

For each batch of data, we loop through the rows and convert each row to JSON format as before. However, instead of adding each row to the request body, we concatenate all the rows into a single JSON array. We then set the request body to the JSON array and send the request to the REST endpoint.

Note that this code assumes that the REST endpoint expects an array of JSON objects as the request body.

PUSH to Web with Open Hub

To push data from SAP BW to a REST endpoint using Open Hub, you can follow these steps:

- Create an Open Hub Destination: Go to transaction

RSA1and create a new Open Hub Destination. In the Destination tab, specify the name and description of the destination and the URL of the REST endpoint as the target system. - Define the Data Source: In the DataSource tab, select the data source from which you want to extract data. Define the data selection criteria and the fields to be included in the data extraction.

- Set the Transfer Method: In the Transfer Method tab, select the transfer method as „Push“ and specify the target data format as „JSON“.

- Map the Fields: In the Mapping tab, map the fields of the DataSource to the target fields of the REST endpoint. If necessary, you can use the ABAP code to transform the data before sending it to the endpoint.

- Activate the Destination: After defining the Open Hub Destination, activate it by going to the Change mode of the destination and clicking the Activate button.

Once you have completed the above steps, data will be extracted from the DataSource and sent to the REST endpoint using the Open Hub Destination. The data will be transformed into JSON format and sent as a POST request to the REST endpoint.

Note that if the REST endpoint requires authentication or any other additional parameters, you’ll need to configure those as well in the Open Hub Destination.

Check ODQ loads to delete data in ADSO

To only load the correct data from the Query, we need to delete the data from the ADSO. This should be available for on subscriber and timestamp level.

The data from ODQ can be read from the tables

- ODQSSN – Operational Delta Queue: Subscription

- ODQDATAXX – other tables

The loads in the ADSO has to be loaded on the Subscription level.

Probably we need another Table to manage the active subscriptions.

Neueste Kommentare